Worried About Bias in Star Ratings? Us Too.

One of the main reasons B2B technology buyers like you come to TrustRadius is to find unfiltered and unbiased product feedback from real users. Our research shows that while buyers rely on vendors for information during the purchase process, they also know vendors aren’t always forthcoming about a product’s limitations. That is a primary reason why buyers use peer review sites like TrustRadius to get the full picture and ensure they are making the right decision.

When it comes to B2B technology decisions, often thousands if not millions of dollars — and someone’s career — are on the line. We know it is extremely important for the reviews and ratings on TrustRadius to be authentic and unbiased. That is why we screen all reviews before publishing to ensure that the individuals contributing ratings or reviews are recent users of the product, with no conflict of interest, who are able to provide useful and detailed insights. We are the only B2B review site that has had these standards in place since launching our site in 2012.

But soon after launching, we noticed a different but equally impactful bias seeping into our data: selection bias.

When Vendors Drive Reviews

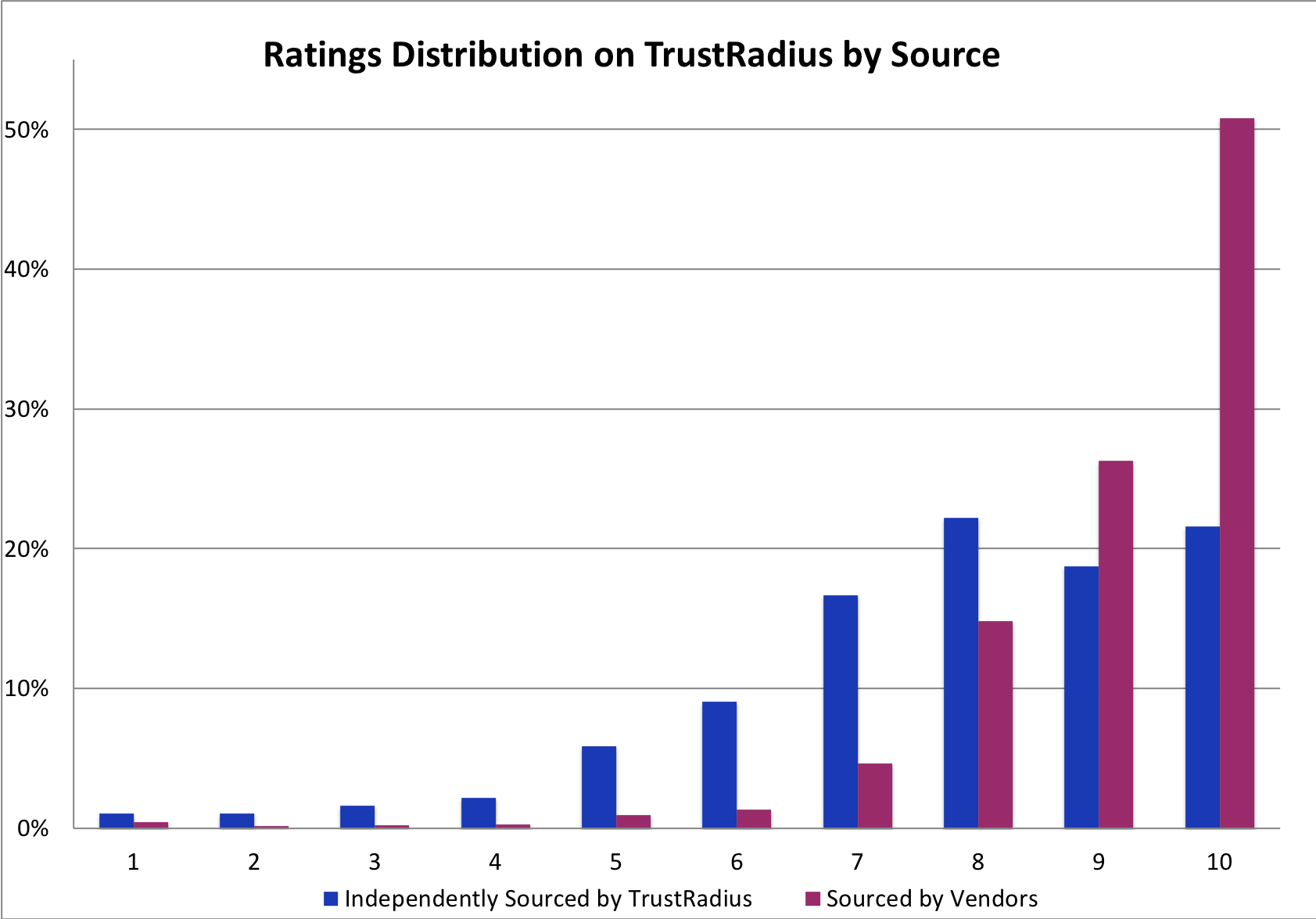

All reviewers on TrustRadius are real users offering useful insights for buyers. But when vendors invite their own customers to write reviews, the overall sentiment distribution looks markedly different from the one we see with our own methods of acquiring reviews, which involve independently finding and inviting users.

Unsurprisingly, vendors generally invite their happiest customers to write reviews. This cherry-picking isn’t nefarious or fraudulent. For most vendors, asking a customer with mixed sentiment or a negative experience for a favor — let alone a favor that involves sharing their feedback in a public venue — seems counterintuitive. And happy customers do indeed provide useful and genuine feedback, as well as thoughtful suggestions for improvement.

But the experience of advocates doesn’t always represent the typical customer experience. So cherry-picking by vendors leads to misleading and, frankly, untrustworthy data in aggregate. Buyers are savvy — you know when the data seems skewed or too good to be true, or when a key perspective is outright missing. But untrustworthy data doesn’t help you decide what product to purchase.

Allowing cherry-picking to inflate scores on review sites is bad for vendors too. It creates a ratings arms race, meaning that vendors who want to gather authentic feedback from their customers won’t have a level playing field when they’re compared to competitors who have inflated their scores.

Most importantly, it makes it harder for you to find the information you need to buy with confidence. So we decided to do something about it.

What We Do to Address Cherry-Picking

1. We encourage vendors to invite all their customers, or a random sample of them, to review them on TrustRadius.

We take every opportunity to tell vendors that getting all types of customers to contribute reviews is not only best for buyers but also benefits vendors themselves. Our buyer research shows that buyers are aware no product is perfect, and they want to hear from middle-of-the-road users who have detailed, balanced, and insightful feedback. As one buyer put it: “I find 2-4 star reviews can be the most helpful.”

Our research also shows that when buyers can’t find that kind of balanced feedback, they either keep looking or purchase with less confidence, thus slowing down the process for everyone involved.

Some vendors follow our advice. A number of vendors have proactively invited broad samples of their customers to write reviews on TrustRadius, and have discovered the value of being a truly transparent and customer-centric company.

But not all vendors are ready for that, so transparency around how reviews get sourced is also important.

2. We mark each review with important information about how the reviewer was discovered and invited.

We believe all reviews published on our site are useful. But knowing where they came from helps you decide for yourself whether or not you’re accessing the full spectrum of perspectives.

Every review is labeled to let you know whether the reviewer was:

- Invited by the vendor: This means the vendor invited the user to write a review on TrustRadius.

- Invited by TrustRadius on behalf of the vendor: This means the vendor enlisted TrustRadius to invite their customers to write reviews.

- Independently invited by TrustRadius: This means that the individual was invited through our independent methods of sourcing reviews (such as inviting members of our community to contribute reviews, or using external sources to find potential reviewers).

We also disclose whether the reviewer was offered an incentive to thank them for their time, both for transparency and to comply with FTC requirements. Incentives are widely used in B2B reviews because they increase response rates and motivate reviewers to spend more time on their reviews. They can never be contingent on a particular response or sentiment. Research has shown that incentives offered by the vendor may bias the reviewer, but incentives coming from a third party like TrustRadius do not.

Finally, if the reviewer was invited by the vendor or by TrustRadius on behalf of the vendor, and we verified that a representative sample of customers was invited (i.e., no cherry-picking), we let you know in the review source label as well.

3. We correct for selection bias in overall scores with our trScore algorithm.

Vendors who cherry-pick can sometimes significantly inflate their scores. Many buyers see through this, but we want to ensure our data is accurate and easy for buyers to understand. Some buyers want to get a quick pulse on user sentiment and don’t have time to sift through how each review was sourced.

So we designed an algorithm that removes selection bias from a product’s ratings in aggregate. Scores are primarily driven by sets of reviews that are clearly from random and representative samples of customers.

The trScore algorithm also gives more weight to recent reviews and ratings. This ensures that as products and customer satisfaction change, the score does too.
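To illustrate the two ideas described above (favoring representative samples and favoring recent reviews), here is a minimal sketch of a weighted average. It is not the trScore formula, which this article does not spell out. The `Review` fields, the 1-10 rating scale, the one-year half-life, and the 0.1 weight for non-representative reviews are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Review:
    rating: float         # assumed 1-10 scale
    submitted: date       # publication date of the review
    representative: bool  # True if sourced independently or from a verified representative sample


def weighted_score(reviews, today, half_life_days=365.0, non_representative_weight=0.1):
    """Average rating weighted by recency and by review source.

    Hypothetical illustration only: the weights are assumptions, not trScore's.
    """
    total_weight = 0.0
    weighted_sum = 0.0
    for r in reviews:
        age_days = max((today - r.submitted).days, 0)
        # Exponential decay: a review loses half its weight every half_life_days.
        recency = 0.5 ** (age_days / half_life_days)
        # Reviews from representative samples drive the score.
        source = 1.0 if r.representative else non_representative_weight
        weight = recency * source
        total_weight += weight
        weighted_sum += weight * r.rating
    if total_weight == 0:
        return None  # no reviews to score
    return weighted_sum / total_weight


today = date(2024, 1, 1)
reviews = [
    Review(rating=6.0, submitted=today, representative=True),
    Review(rating=10.0, submitted=today, representative=False),
]
print(weighted_score(reviews, today))  # about 6.36, versus a plain mean of 8.0
```

In this sketch, the cherry-picked 10 barely moves the score because it carries a tenth of the weight of the representative review. A production algorithm would need to choose these weights from data instead of fixing them by hand.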

What About Other Review Sites?

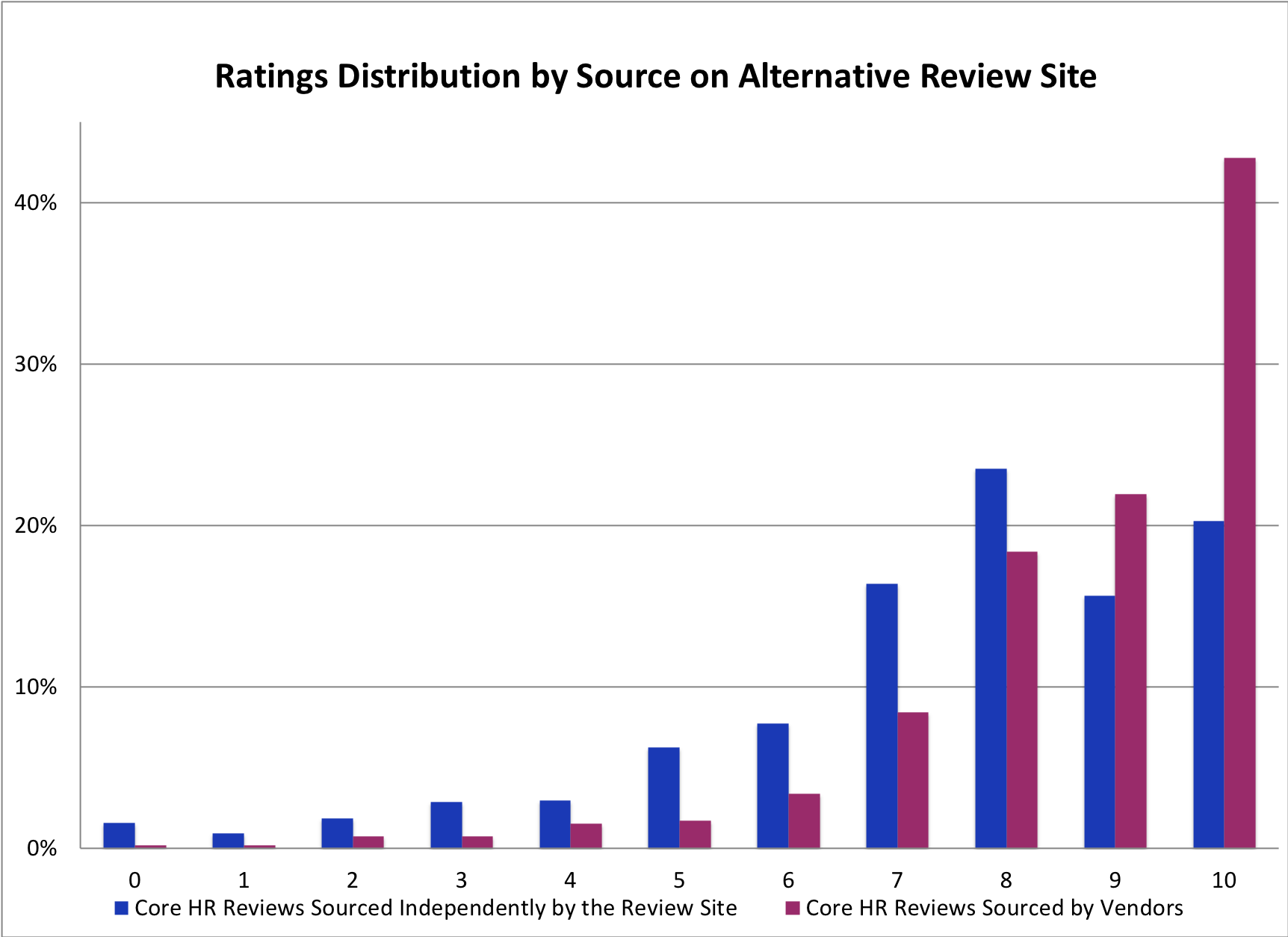

We analyzed data from another B2B review site that also discloses the source of its reviews. Our analysis revealed that the other review site experiences a similar dynamic: reviews sourced independently by the review site represent a relatively even distribution of sentiment, whereas reviews sourced by vendors are significantly more positive.

Here is a look at the distribution of ratings in a representative category, Core HR, on that site:

According to our analysis, vendors who choose to invite their customers to write reviews generally manage to increase their product’s score by about 5 to 15% on the other review site. But for products whose vendors don’t participate, scores are purely driven by the reviews sourced by the review site, and aren’t inflated by cherry-picking at all.

This matches what we’ve seen ourselves on TrustRadius. Before we implemented trScore, vendors who actively drove reviews were able to inflate their scores by about 10% on average. That may not sound like much, but compare it to letter grades: 10% on a 100-point scale is the difference between an A and a B. And not everyone is graded on the same curve, since some vendors drive reviews and some don’t, so the resulting data is difficult to unpack. As a result, buyers can’t easily tell how products compare, and have to do extra work to figure it out.
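To make the arithmetic concrete, the sketch below compares a product's average rating from independently sourced reviews with its average once vendor-invited reviews are mixed in. The ratings are made-up numbers on an assumed 10-point scale, not data from TrustRadius or any other site, and `score_inflation` is a hypothetical helper.

```python
from statistics import mean


def score_inflation(independent, vendor_invited):
    """Relative lift in the average rating once vendor-invited reviews are added."""
    baseline = mean(independent)
    blended = mean(list(independent) + list(vendor_invited))
    return (blended - baseline) / baseline


independent = [7, 8, 8, 8, 9]        # illustrative ratings only
vendor_invited = [9, 10, 10, 9, 10]  # illustrative ratings only

lift = score_inflation(independent, vendor_invited)
print(f"{lift:.1%}")  # 8.0 average rises to 8.8, a lift of about 10%
```

A product whose vendor does not drive reviews would stay at its baseline, which is why two products with identical real-world satisfaction can end up a full "letter grade" apart.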

If you’re using other review sites in your research, make sure to check for review source and incentive disclosures. If there aren’t any, look at the histogram showing the distribution of ratings. If you see mostly 5-star and maybe a few 4-star reviews, then the data may be biased, and you’ll have to do some digging to find the information you want.
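If you have the rating counts behind a histogram, the check described above can be approximated in a few lines. This is a rough heuristic sketch, not a statistical test. The function name and the 90% threshold are assumptions picked for illustration.

```python
def looks_top_heavy(star_counts, threshold=0.9):
    """Return True if 4- and 5-star reviews make up at least `threshold` of all reviews.

    star_counts maps a star rating (1-5) to the number of reviews with that rating.
    The default threshold is a hypothetical cutoff, not an established standard.
    """
    total = sum(star_counts.values())
    if total == 0:
        return False  # nothing to judge
    top = star_counts.get(5, 0) + star_counts.get(4, 0)
    return top / total >= threshold


# Illustrative counts only
print(looks_top_heavy({5: 80, 4: 15, 3: 3, 2: 1, 1: 1}))    # True: 95% are 4 or 5 stars
print(looks_top_heavy({5: 40, 4: 30, 3: 20, 2: 6, 1: 4}))   # False: 70% are 4 or 5 stars
```

A top-heavy result does not prove cherry-picking, since some products are simply well liked. It is a signal to look more closely at how the reviews were sourced.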

Feedback Welcome

We would love to hear from you. Do you see sampling bias as an issue on review sites? Is there anything else TrustRadius could be doing to ensure you’re getting the full picture of the products you’re researching? Send us your thoughts at research@trustradius.com.
