6 Steps to Designing a Successful Survey

Our world is becoming increasingly data-driven. Whether you work in the public or private sector, you will be asked to justify critical decisions with data. At the same time, technological advancements give us more opportunities to leverage data to make informed decisions.

Surveys are an incredibly helpful and common way to collect qualitative and quantitative data. But not everyone knows how to craft effective surveys that produce useful and reliable results.

Why is Good Survey Design Important?

If you’re wondering why establishing good survey design practices is important, it’s simple. Good surveys with good questions produce good data that can turn into valuable insights. Poorly designed surveys result in unreliable, shoddy, and low-quality data. Where good surveys inform smart decision making, bad surveys are worse than unhelpful. They can be actively harmful.

Having high-quality survey data is critical in the academic setting. It’s necessary for producing accurate and influential academic studies. Scholars who produce journal articles found to be based on shaky data can lose their funding and credibility.

Surveys are also used to conduct various types of research within the business world, including:

- Market research

- Competitive research

- Product feedback

- User experience research

- Employee experience feedback

- Customer feedback

Here too, having good quality survey data matters. Surveys that produce inaccurate data can lead to any number of negative business outcomes. A poorly constructed customer feedback survey may lead you to believe that your customers are way happier with your product or service than they truly are. This may negatively impact things like sales and renewal forecasts. In the worst case, bad survey data leads to bad decision making—resulting in lost revenue.

6 Steps to Designing an Effective Survey

Designing an effective survey is both an art and a science. But there are a few concrete steps you can take to make sure your surveys produce reliable and accurate results.

Here’s a step-by-step guide you can follow to design successful surveys:

- Define Your Goals

- Match Your Question Types to Your Goal

- Create Your Survey Questions

- Root Out Question Bias

- Determine Your Survey Flow

- Design and Test Your Survey

1) Define Your Goals

Set your goals and survey parameters before you even start thinking about which questions to ask. This includes understanding exactly why you’re running the survey. What business decisions will the results inform?

Let’s say you want to run a customer feedback survey. Make sure you nail down what specific type of feedback you’re looking for from customers. Perhaps your organization needs to collect net promoter score (NPS) data. Or maybe you’re looking for qualitative feedback about the overall customer experience. It’s vital to understand if you need quantitative data or qualitative responses.

Calculating your NPS requires quantitative data. Feedback about the customer experience will likely take the form of open-response (i.e., qualitative) feedback. Thus, the type of feedback you’re looking for will help dictate what type of survey questions you should create.
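To make the quantitative case concrete, here is a minimal Python sketch of the standard NPS calculation: the percentage of promoters (scores of 9 or 10) minus the percentage of detractors (scores of 0 through 6) on a 0-10 "likelihood to recommend" scale. The function name and the sample scores are made up for illustration.

```python
def net_promoter_score(scores):
    """Return NPS (-100 to 100) for a list of 0-10 recommendation ratings."""
    if not scores:
        raise ValueError("need at least one response")
    promoters = sum(1 for s in scores if s >= 9)   # scores of 9 or 10
    detractors = sum(1 for s in scores if s <= 6)  # scores of 0 through 6
    # Passives (7 or 8) count toward the total but toward neither group.
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical responses: 4 promoters, 3 passives, 3 detractors.
sample_scores = [10, 9, 9, 10, 8, 7, 8, 6, 3, 5]
nps = net_promoter_score(sample_scores)
print(f"NPS: {nps:.0f}")
```

Because the score depends only on counts of numeric ratings, it can be computed automatically, which is not true of open-response feedback.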

Consider how many topics you want to include in the survey. This will determine how many questions the survey needs. Think about how many responses you’ll need to collect to feel confident in the results. Will you try to show statistical significance with the survey data? If so, you’ll need more responses from a more representative pool of people than you would if you’re collecting anecdotal feedback.
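One common way to put a number on "how many responses" is a margin-of-error calculation. The sketch below uses Cochran's sample size formula with an optional finite population correction. The function name and default values (95% confidence, a 5% margin of error, and a 50/50 split in answers) are assumptions for illustration, and the formula assumes a simple random sample.

```python
import math


def required_responses(margin_of_error=0.05, z=1.96, proportion=0.5, population=None):
    """Estimate the number of responses needed for a given margin of error.

    z=1.96 corresponds to a 95% confidence level; proportion=0.5 is the most
    conservative assumption about how answers will split.
    """
    n = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    if population is not None:
        # Finite population correction for small, known audiences.
        n = n / (1 + (n - 1) / population)
    return math.ceil(n)


large_audience = required_responses()                 # large or unknown population
small_audience = required_responses(population=1000)  # e.g. 1,000 customers
print(large_audience, small_audience)
```

With these defaults the estimate is about 385 completed responses for a large audience, and fewer when the total audience is small. This counts completed responses, so the number of invitations you send will need to be higher.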

2) Match Your Question Types to Your Goal

Once you’ve defined your goals, think about what types of questions will produce the data you need. Structured or close-ended questions will produce quantitative data that’s easier to analyze.

Unstructured or open-ended questions, on the other hand, can produce insightful qualitative responses. These responses are typically text-based and can be thought of as anecdotal evidence. However, analyzing open-response survey data is more time-consuming than analyzing structured quantitative data. It often involves manually going through open responses and hand-coding the feedback. As you can imagine, hand-coding a large number of survey responses can turn into hours’ worth of work!

To save yourself time, consider opting for close-ended question styles. These types of questions are also easier for respondents to answer than open-ended questions. Because they’re usually quick and easy to answer, they place a lower cognitive burden on the respondent. If you’re planning on creating a survey with more than 10 questions, it’s a good idea to have most of them be structured questions.

Though close-ended questions are easier to deal with in many respects, they’re not always the right tool for the job. If you’re looking to collect great quotes from your customers or employees, open-ended questions will work best. Open-ended questions also work well if you’re doing more generative or discovery research.

| Quantitative Data | Qualitative Data |

| Answers “What,” “Where,” “How,” and “Who” | Answers “Why” |

| Based on Numbers | Based on Opinions and Experiences |

| Larger Sample Sizes | Smaller Sample Sizes |

| Statistical Analysis | Interviews & Observations |

| Objective | Subjective |

| Closed-Ended Questions | Open-Ended Questions |

| To Validate Hypotheses | To Generate Hypotheses or Develop Ideas |

Before moving on to writing up a rough draft of your survey questions, think about what type of demographics you need. Will you need to segment your data by things like age, gender, location, race or ethnicity, etc.?

Make sure to include demographic questions for the most essential dimensions you’ll want to slice your data along. However, be aware that these can feel like personal questions. Not everyone may be comfortable answering them. A good way to encourage people to answer demographic questions truthfully is to let respondents know their feedback will be completely anonymous.
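To illustrate what "slicing" by a demographic dimension looks like once responses are in, here is a small Python sketch that averages a rating per age group. The field names (`age_group`, `satisfaction`) and the sample rows are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical responses: one demographic field and one 1-5 rating each.
responses = [
    {"age_group": "18-34", "satisfaction": 4},
    {"age_group": "18-34", "satisfaction": 5},
    {"age_group": "35-54", "satisfaction": 3},
    {"age_group": "35-54", "satisfaction": 2},
    {"age_group": "55+", "satisfaction": 4},
]


def average_by_segment(rows, segment_key, value_key):
    """Group rows by a demographic field and average a numeric answer per group."""
    groups = defaultdict(list)
    for row in rows:
        # Respondents who skipped the demographic question get their own bucket.
        groups[row.get(segment_key, "No answer")].append(row[value_key])
    return {segment: mean(values) for segment, values in groups.items()}


by_age = average_by_segment(responses, "age_group", "satisfaction")
for segment, avg in by_age.items():
    print(f"{segment}: {avg:.1f}")
```

A comparison like this is only possible if the demographic question was asked in the first place, which is why it pays to decide on the essential dimensions before drafting.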

3) Create Your Survey Questions

Creating a written or typed draft of your survey questions will help save you time. You won’t have to keep redesigning your survey each time you edit a question.

There are several best practices to follow when writing survey questions. Here’s a curated list of the most important ones:

- Keep survey questions short and easy to understand

- Ask one question at a time, no double-barreled questions

- Don’t use overly complex language

- Avoid using industry or academic jargon

- Keep answer options balanced

- Don’t ask leading questions; keep the language neutral

- Try to get rid of, or correct for, any biases in your questions

- Only ask as many questions as you absolutely need to

- Rating scale questions can produce valuable data along a spectrum

- Use yes/no style questions selectively and strategically

- Images and videos can help explain certain topics, but they can easily become distracting

- Don’t crowd survey pages with too many questions

While keeping these best practices in mind, identify exactly what type of question will yield the best results:

Open-ended questions

- Text-based: Respondents can type in a response and are not limited to choosing a predefined answer option. These types of questions produce unstructured response data.

Close-ended questions

All of the following question types produce structured response data.

- Multiple-choice: Respondents pick one answer option out of many predefined options. These are often displayed as a list of options. Dropdown lists can be used for multiple-choice questions too.

- Multi-select: Respondents can pick multiple predefined answer options. These questions often prompt respondents to ‘select as many options as apply to you’ and are typically in a list format.

- Dichotomous scale: These questions only have two options for respondents to choose from. Typical answer pairs are: yes/no, true/false, and agree/disagree.

- Rating scale: These questions help gauge respondent sentiment towards a topic using a scale or range of options. 1-10, 1-7, and 1-5 scales are commonly used for rating questions. NPS questions are an example of rating scale questions.

- Likert: These are rating scale questions that gauge the respondent’s level of agreement or disagreement with a statement. Typically, these take the form of 5-point or 7-point scales that range from ‘Strongly Disagree’ to ‘Strongly Agree’.

- Rating slider: This is another type of rating scale question that allows respondents to physically drag the slider to a specific numerical value (e.g. from 1-10 or 1-5).

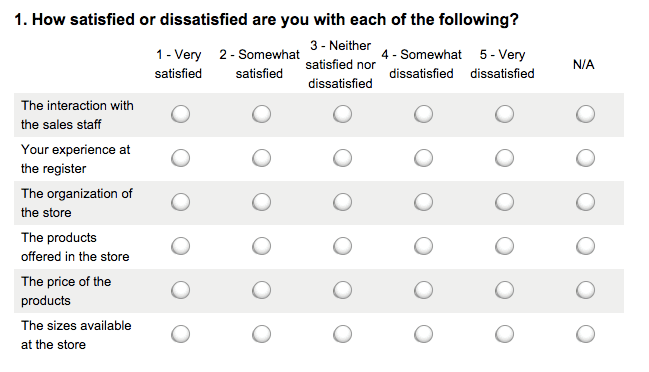

- Matrix tables: Respondents are asked to rate or evaluate specific row items using a set of column choices. This helps assess respondent preferences or feelings towards multiple items at once. See the example image below:

- Rank order: These questions ask respondents to order a single column of items in order of most to least important, frequent, or favored. Typically, these questions use a 1-5 scale, with the item in the number 1 spot being the most important.

- Dropdown: This is a form of multiple-choice question that provides all answer options in a dropdown menu for respondents to choose from. These are typically single-select style questions.

- Demographic questions: These are typically multiple-choice or multi-select questions. They collect identifying information about individual respondents. They are often used to segment survey responses to compare results across groups. Each demographic question deals with a specific topic. These may include age, gender, location, company size, nationality, household income, race or ethnicity, etc.

Beware of survey fatigue when creating your survey questions. It can lead to higher respondent drop-off rates. If someone becomes tired and frustrated when taking your survey, they’re also less likely to respond truthfully. Keeping your survey short is one of the best ways to increase survey completion rates and protect the integrity of responses too.

4) Root Out Question Bias

There are multiple types of bias that can find their way into your survey questions. Some of them are so subtle that you may not even realize your survey questions could elicit biased responses.

So after creating your survey question draft, go over each question with a fine-toothed comb and look for any potential biases. The table below goes over some of the most common types of response bias:

| Type of Bias | Explanation | Solution |

| Acquiescence Bias | The tendency for respondents to agree rather than disagree with a statement, even if it’s not an accurate reflection of how they feel. | Don’t ask agree/disagree style questions. If you must, include a similar question that has the opposite scale so you can compare responses to these questions. |

| Social Desirability Bias | The tendency for respondents to respond in a way they think is socially acceptable or expected. This can lead to under-reporting ‘bad’ behaviors and over-reporting ‘good’ ones. | Phrase questions in a way that doesn’t make it seem like there are only 1 or 2 socially acceptable answers. Communicate to respondents that their answers are completely confidential. |

| Question Order Bias | When the order of questions influences respondents’ answers to subsequent questions. | Pay close attention to how you order your survey questions. Start with broader, more general questions and get more specific later on in the survey. Ask more personal questions later in the survey. If it makes sense, randomize the question order. |

| Primacy Bias | For multiple-choice questions, the tendency for respondents to pick the first answer option that is provided, even if it’s not a 100% truthful answer. | Randomizing answer option order helps combat primacy bias. |

| Recency Bias | The tendency for respondents to pick the most recent (usually the last) answer option. | Randomizing answer option order helps combat recency bias. |
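The randomization suggested for primacy and recency bias can be sketched in a few lines of Python. Many survey platforms offer this as a built-in setting, so the code below is only an illustration of the idea. The function name, the respondent IDs, and the choice to pin options such as "Other" to the end are assumptions of this sketch.

```python
import random


def randomized_options(options, respondent_id, pinned_last=("Other", "None of the above")):
    """Return answer options in a per-respondent random order.

    Options named in pinned_last stay at the end of the list
    (a design assumption of this sketch, not a rule from the article).
    """
    shuffled = [o for o in options if o not in pinned_last]
    pinned = [o for o in options if o in pinned_last]
    # Seeding with the respondent ID keeps the order stable if the page reloads.
    random.Random(str(respondent_id)).shuffle(shuffled)
    return shuffled + pinned


options = ["Email", "Phone", "Live chat", "Help center", "Other"]
for respondent_id in (101, 102, 103):
    print(respondent_id, randomized_options(options, respondent_id))
```

Across many respondents, each option appears in each position about equally often, so position effects tend to average out rather than favoring one answer. Ordered scales, such as 1-5 ratings, are usually left in their natural order.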

These types of response bias can ultimately lead to collecting bad survey data. There are also a few types of questions that can produce questionable survey responses. Below are three types of questions to avoid asking in your surveys:

- Leading questions

A leading question steers respondents toward the “right” answer through the way it is worded. The example below makes it seem as if ‘struggling with product issues by yourself’ is not the right answer option. Thus, the respondent is led to respond that they would rather work with the customer support team.

Example question: “Would you rather struggle with product issues by yourself or work with our super-responsive customer support team?”

The best way to avoid asking leading questions is to make sure you don’t point respondents to a ‘right’ answer. Use neutral language. Present answer options as equally valid responses.

- Loaded questions

This is when you ask a question that forces the respondent to give a certain type of answer. In the example below, this question assumes the respondent has interacted with customer support and forces them to answer as if they have. But this becomes problematic for people who have never reached out to your customer support team.

Example question: “How would you rate your experience with our customer support team?”

To purge any loaded questions from your survey, use question logic to make sure only qualified respondents who have the right type of experience see these questions. Alternatively, include answer options for people who don’t have this type of experience. So in the example above, ‘I have never interacted with customer support’ should be an answer option.
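As a rough illustration of question logic, the Python sketch below shows the customer support rating only to respondents who say they have contacted support. The data structure, the `show_if` field, and the screening question are hypothetical. Real survey platforms configure this through their own interfaces.

```python
# Hypothetical survey definition: a question may carry a show_if rule
# that inspects the answers collected so far.
questions = [
    {"id": "contacted_support",
     "text": "Have you ever contacted our customer support team?"},
    {"id": "support_rating",
     "text": "How would you rate your experience with our customer support team?",
     "show_if": lambda answers: answers.get("contacted_support") == "Yes"},
    {"id": "overall_rating",
     "text": "How would you rate our product overall?"},
]


def visible_questions(questions, answers):
    """Return the IDs of the questions a respondent should see."""
    # Questions without a show_if rule are always shown.
    return [q["id"] for q in questions
            if q.get("show_if", lambda _answers: True)(answers)]


print(visible_questions(questions, {"contacted_support": "Yes"}))
print(visible_questions(questions, {"contacted_support": "No"}))
```

Respondents who answer "No" to the screening question skip the rating entirely, so nobody is forced to rate an experience they never had.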

- Double-barreled questions

A double-barreled question asks more than one thing within a single survey question. In the example below, respondents are asked to rate their satisfaction with both their job and their salary, even though they may feel differently about each.

Example question: “How satisfied or unsatisfied are you with your current job and salary?”

This issue has an easy fix: only ask one question at a time, even if the topics are related.

5) Determine Your Survey Flow

Survey question order is almost as important as the question wording itself. Your survey flow can impact the type of responses people provide. It can also contribute to question order bias (see the table above for more details). Order your survey questions smartly and strategically. This will help minimize any effect the question sequence could have on responses.

Generally, your survey should start with more general, easy-to-answer, non-personal questions. The middle of your survey is a good place to introduce more complex questions or ones that place a higher cognitive burden on the respondent. Open-response and matrix-style questions place a high cognitive load on respondents. Multiple-choice, rating, and scale questions are great examples of questions with a low cognitive load.

Save more personal questions (typically demographic questions) for the end of your survey. The end of your survey is an ideal place for open-text ‘wrap-up’ style questions. For example, if you’re running an employee feedback survey, a great last question might look like this:

“Is there any other feedback you would like to give to your manager or the executive team?”
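The flow described above can be sketched as a simple sort over a question draft. The stage labels, their order, and the sample questions are assumptions made for this Python example. In particular, placing the wrap-up question after the demographic questions is one reasonable reading of the guidance, not the only one.

```python
# Assumed stage order: general -> complex -> demographic -> wrap-up.
STAGE_ORDER = {"general": 0, "complex": 1, "demographic": 2, "wrap_up": 3}

# Hypothetical draft, listed in the order the questions were written.
draft = [
    {"text": "What is your age range?", "stage": "demographic"},
    {"text": "How satisfied are you with your role overall?", "stage": "general"},
    {"text": "Is there any other feedback you would like to give?", "stage": "wrap_up"},
    {"text": "Rate each of the following aspects of your team.", "stage": "complex"},
    {"text": "Would you recommend working here to a friend?", "stage": "general"},
]

# sorted() is stable, so questions within a stage keep their drafted order.
ordered = sorted(draft, key=lambda q: STAGE_ORDER[q["stage"]])
for number, question in enumerate(ordered, start=1):
    print(f"{number}. [{question['stage']}] {question['text']}")
```

Tagging each question with a stage while drafting makes it easy to reorder the survey later without rereading every question.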

6) Design and Test Your Survey

Once you’re done perfecting the questions, it’s time to design your survey. For most people, this means manually setting up the survey questions within a survey platform interface. A simple form-building tool may do the trick as well. Form-building tools can work well for:

- Short and simple surveys (1 to 5 questions)

- Informal surveys (when you won’t be collecting sensitive information)

- Low-stakes surveys (when survey results won’t influence important business decisions)

On the other hand, longer and more complex, formal, and high-stakes surveys usually require a more robust survey platform. These types of tools will have more advanced result analytics capabilities and more varieties of question types. Some even allow you to purchase survey participants from within the platform.

Double-check that you’ve set up any conditional, branching, or skipping question logic correctly. After you’ve finished designing your survey, wrangle some friends or colleagues to help test it.

Many survey platforms allow you to send a preview or custom link to survey testers. Some also allow testers to provide feedback about the survey questions and design within the testing interface. If not, creating a document for testers to leave their feedback in can be helpful.

With the survey design and testing wrapped up, it’s time to launch your survey! (Almost.)

Before sending your survey out into the world, ask yourself the following critical questions:

- How many responses do you need? Should you set a survey response cap?

- What type of respondents do you need?

- What pool of survey takers do you plan on reaching out to? Do you have access to them?

- Should you include an incentive? If so, how much?

- What channel will you use for survey invitations? (E.g. email, social media, etc.)

- What’s your timeline? Is there a hard deadline you need to have all responses collected by?

Survey Tools for Every Use Case

There are tons of survey and form-building platforms to choose from. They range from free to very expensive, so make sure you invest in one that fits your unique research needs.

Some great free tools to consider include:

But be aware that free tools typically have limited capabilities. Some platforms only allow you to collect a certain number of responses or ask up to a certain number of questions. Others may have a more limited selection of question types to choose from. They may also not have advanced survey analysis tools. Free products can be great for less complex, shorter, and lower-stakes surveys.

Other survey platforms to consider for more complex or advanced survey projects include:

These platforms will be more expensive but will provide you with the tools you need for in-depth survey analysis. Most also allow for an unlimited number of questions per survey. Check out the TrustRadius Survey & Forms Building Tools page to compare across different survey products.
